I like to start most of my analysis pieces with historical data. It might be because I’m old or it might just be because I tend to apply a timeline-specific approach to the way I analyze things. Either way, it’s time to flash back into history to discuss the way things once were. For fun, let’s pretend we’re going back in time in a DeLorean.

Applications In The Dark Ages

Applications and their composition used to live in the enterprise datacenter. Organizations would have racks upon racks of hardware running software that was created or purchased to deliver value to customers. It took multiple teams of people to build and create and maintain these systems and datacenters around the clock.

Eventually, the cloud and containerization were “born” and people started moving these workloads out of the datacenter.

The Death of the Datacenter - Lift and Shift Time

Movement to the cloud meant way fewer physical and human resource requirements for companies compared to running all their own systems locally in their own datacenter. Together, infrastructure teams worked with the application development groups to take the applications and wrap each of them in a single container. Everything had gone virtual and we had abstracted away the need for lower-level, infrastructure-specific glue. The container provided the ability to move entire applications to any cloud system and have them just work. We essentially lifted and shifted our applications straight into the cloud, without any redesign.

BOOM - The Atomization of the Application

Lift and shift brought significant improvements to how enterprises created and maintained their applications; however, it didn’t scale. In traditional infrastructures, when software-based businesses grew beyond the capabilities of the physical hardware, we just bought additional hardware. In the cloud, we needed to transition to cloud-native services to achieve similar scale improvements. Done right, cloud-native capabilities allow us to scale applications faster, more easily, more cleanly, and more efficiently (and at lower cost).

So we began the process of breaking down our applications. Moving the applications into smaller and smaller bite-sized chunks allowed the system to flex in ways that it couldn’t before. If an individual feature or function was the biggest bottleneck in an application, we could break it out, give it a cloud-native infrastructure backing, and allow it to scale up and down as required by the overall system, no matter how complex it was.

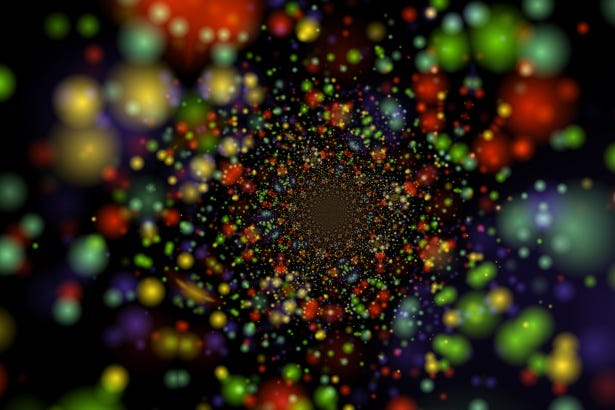

I like to view this as the “Application Big Bang.” It was the moment the world realized that scale doesn’t come from a lift-and-shift model but instead requires true atomization of applications to return the best possible efficiency.

Enter APIs, Microservices, and Service Mesh Models

The end state of the atomization of applications looks massively different than it did when we began moving our applications to the cloud. What once was one application may now be fifty or more. Each of those components is broken down to the individual function level, taking intra-process communications and turning them into a rapidly multiplying set of communication paths between micro “applications.”
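To put rough numbers on that growth: if any component may need to talk to any other, the count of potential paths rises roughly with the square of the number of components. The helper below is a back-of-the-envelope sketch, not a measurement; `max_paths` is a name made up for illustration, and real systems rarely connect every pair.

```python
def max_paths(components: int) -> int:
    """Upper bound on distinct pairwise paths among `components` services.

    Assumes, purely for illustration, that any two components may talk.
    """
    return components * (components - 1) // 2


for n in (1, 5, 50):
    print(f"{n} components -> up to {max_paths(n)} paths")
```

A single monolith has no inter-service paths to secure; fifty fully connected components could have over a thousand.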

This atomization shows up in the growth of the microservice-based model of application design. Ultimately this is the best way to build an application, as it allows for scale, plug-and-play interchangeability of functional components, smaller upgrades without taking the entire system down, and a natural fit to cloud-centric models on a “micro application” by “micro application” basis.

One can even go so far as to turn this into a full service mesh-based approach, where application components go up and down as needed by the primary application core. They are controlled and spun up on an as-needed basis, with each component registering with a service mesh controller in the center of the mix.
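The registration idea can be sketched in a few lines. This is a toy model under my own assumptions, not how any particular service mesh product works: `MeshController`, its method names, and the addresses are all hypothetical.

```python
class MeshController:
    """Hypothetical central registry that components report to."""

    def __init__(self) -> None:
        # Maps a service name to the set of addresses currently running it.
        self._instances = {}

    def register(self, service: str, address: str) -> None:
        """Record that an instance of `service` came up at `address`."""
        self._instances.setdefault(service, set()).add(address)

    def deregister(self, service: str, address: str) -> None:
        """Record that an instance went away; drop the service if none remain."""
        addresses = self._instances.get(service, set())
        addresses.discard(address)
        if not addresses:
            self._instances.pop(service, None)

    def lookup(self, service: str) -> list:
        """Return the known addresses for `service`, sorted for stable output."""
        return sorted(self._instances.get(service, set()))


mesh = MeshController()
mesh.register("billing", "10.0.0.5:8080")
mesh.register("billing", "10.0.0.6:8080")
mesh.deregister("billing", "10.0.0.5:8080")
print(mesh.lookup("billing"))
```

Components appear and disappear, and callers ask the controller where a service lives instead of hard-coding an address.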

Chances are you are nowhere near this stage. Most companies are not. This is a future state that you should watch closely because you’ll be here eventually.

The Impact on the Future of Cybersecurity

The atomization of the application will have a massive impact on cybersecurity. Here are just a few ways in which these tectonic plates of change are causing shockwaves in how cybersecurity teams execute on a day-to-day basis.

The concept of zero trust (the real concept of zero trust, not the stupid marketing term) becomes increasingly important to the security of this new environment. If we break the application down to the functional component level, we must make sure that each component has its own trusted verification method and that every communication between the individual atoms of the application is continuously authenticated, authorized, and secured.
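Here is a minimal sketch of what “authenticated and authorized on every call” might look like between two components. Everything in it is an assumption for illustration: the service names, the shared-secret keys, and the `ALLOWED` policy are invented, and a real deployment would more likely rely on mutual TLS or short-lived tokens from an identity provider than on static secrets.

```python
import hashlib
import hmac

# Hypothetical per-service secrets, hard-coded only to keep the sketch short.
SERVICE_KEYS = {"cart": b"cart-secret", "billing": b"billing-secret"}

# Hypothetical policy: (caller, target, action) triples that are permitted.
ALLOWED = {("cart", "billing", "create_invoice")}


def _message(caller: str, target: str, action: str, body: bytes) -> bytes:
    """Bind the signature to who is calling, what is called, and the payload."""
    return b"|".join([caller.encode(), target.encode(), action.encode(), body])


def sign(caller: str, target: str, action: str, body: bytes) -> str:
    """Caller side: sign the request with the caller's own key."""
    msg = _message(caller, target, action, body)
    return hmac.new(SERVICE_KEYS[caller], msg, hashlib.sha256).hexdigest()


def verify(caller: str, target: str, action: str, body: bytes, signature: str) -> bool:
    """Receiver side: authenticate the caller, then authorize the action."""
    key = SERVICE_KEYS.get(caller)
    if key is None:
        return False  # unknown caller, so not authenticated
    msg = _message(caller, target, action, body)
    expected = hmac.new(key, msg, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected.encode(), signature.encode()):
        return False  # tampered request or wrong key
    return (caller, target, action) in ALLOWED  # authenticated, now authorize


sig = sign("cart", "billing", "create_invoice", b'{"total": 42}')
print(verify("cart", "billing", "create_invoice", b'{"total": 42}', sig))
```

The point of the sketch is the order of checks: nothing is trusted because of where it sits on the network, and a valid identity alone is not enough without a matching policy entry.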

Because of the Atomization of Applications, API security becomes one of the most important security technologies in the next 5 years. Everything you do will use APIs. Applications we build, applications we atomize, even libraries that we’ve coded to in the past will become SaaS-based APIs as we begin to code our applications to a services-based model.

Additionally, application security as a whole will begin to revolve around API-centric concepts. As applications shrink into microservices, the way application security programs have traditionally been executed, from static analysis all the way through to runtime protection of workloads, will drastically change as well.

Security operations and incident response will have to learn yet another model. Just when we think we’ve got security operations solved, the standard operating procedures get pulled out from beneath us. Now, instead of focusing on application security vulnerabilities, tracking authentications to a single web-application-based interface, and analyzing traffic to and from a small number of targets, we will have to learn how to sift through hundreds (if not thousands) of APIs to analyze activities and actions occurring in this complex system. Everything from logging to incident handling will be reinvented and rethought.
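As one small illustration of that kind of sifting, the sketch below counts denied calls per caller and endpoint across a batch of API log records. The record layout, the service names, and the threshold are all assumptions of mine; real API logs carry far more context than three fields.

```python
from collections import Counter

# Hypothetical, simplified API log records: (caller, endpoint, HTTP status).
events = [
    ("cart", "/billing/invoices", 200),
    ("cart", "/billing/invoices", 200),
    ("search", "/billing/invoices", 403),
    ("search", "/billing/invoices", 403),
    ("search", "/billing/invoices", 403),
    ("cart", "/inventory/items", 200),
]


def denied_calls(records, threshold=3):
    """Return (caller, endpoint) pairs with at least `threshold` 401/403 responses."""
    denied = Counter(
        (caller, endpoint)
        for caller, endpoint, status in records
        if status in (401, 403)
    )
    return {pair: count for pair, count in denied.items() if count >= threshold}


print(denied_calls(events))
```

Here the `search` component keeps getting refused by the billing API, which is the kind of signal that would have been invisible when everything lived inside one process.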

Parting Shot - Live Through the Tsunami

As we noodle on the breadth of impact from the impending API onslaught, it’s easy to become overwhelmed and scared that we will soon be underwater, being swept into uncharted operational territory. The reality is that shifts in the technology landscape have happened multiple times before and we’ve dealt with waves of new security approaches — we moved our organizations to software-as-a-service and learned how to apply security to things we didn’t own, we solved the problem of mobility in the enterprise, we even tackled shifting attacker landscapes and (god forbid) ransomware problems.

Just like all previous changes (or evolutions), we will learn, adapt, and shift how we operate. And we will ride the tide to a safer shore if we approach this shift pragmatically. Heeding early warning signals will allow you to put out the sandbags before your entire organization is under water. We can do this by studying the lessons of the past and applying our new knowledge to our inevitable, not-so-distant API- and application-based future.

Special thank you to

and for helping me to write and edit this article. I appreciate you both!

Cool post! Thanks for sharing! Interesting to note that "atomization" is happening everywhere across the digital value stream. It's not just applications -- but it's also data, structured and unstructured. Shrinking governance and security controls down to the microservices level is quite similar to shrinking governance and security controls down to individual data objects.

In the old days we invested in protecting singular monolithic apps and defending a singular enterprise perimeter. In the future we must govern security and risk associated with the atomic services that make up our applications -- and also the atomic particles of data that constitute a huge part of the modern attack surface.